H.264 Technology: It’s the bitrate!

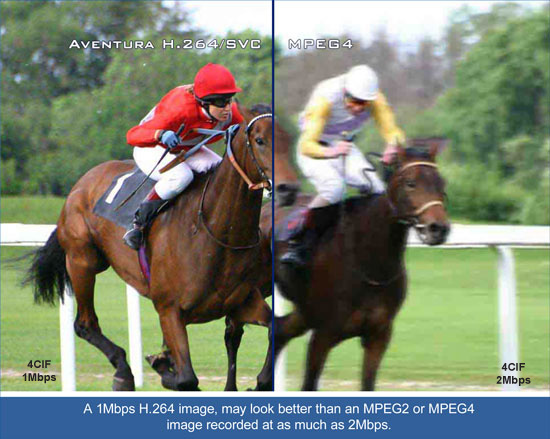

H.264 is getting so much attention because it can encode video using roughly one third of the bits needed by comparable MPEG-2 encoders.

Because H.264 is up to twice as efficient as MPEG-4 Part 2 (natural video) encoding, it has recently been welcomed into the MPEG-4 standard as Part 10 (Advanced Video Coding). Many established encoder and decoder vendors are moving directly to H.264 and skipping the intermediate step of MPEG-4 Part 2.

Goals & Approach of H.264

The International Telecommunication Union (ITU) initiated the H.26L (for “long term”) effort in 1998 as a continuation of work following the MPEG-2 and H.263 standards. The overriding goal was to achieve a factor-of-2 reduction in bit rate compared to any competing standard.

Recall that MPEG-2 was optimized with specific focus on Standard and High Definition digital television services, which are delivered via circuit-switched head-end networks to dedicated satellite uplinks, cable infrastructure or terrestrial facilities. MPEG-2’s ability to cope is being strained as the range of delivery media expands to include heterogeneous mobile networks, packet-switched IP networks, and multiple storage formats, and as the variety of services grows to include multimedia messaging, security, increased use of HDTV, and others. Thus, a second goal for H.264 was to accommodate a wider variety of bandwidth requirements, picture formats, and unfriendly network environments that throw high jitter, packet loss, and bandwidth instability into the mix.

The H.264 approach is a strictly evolutionary extension of the block-based encoding approach so well established in the MPEG and ITU standards. Key steps include:

- Use of motion estimation to support inter-picture prediction, eliminating temporal redundancies.

- Use of spatial correlation of data to provide intra-picture prediction.

- Construction of residuals as the difference between predicted images and source images.

- Use of a discrete spatial transform and filtering to eliminate spatial redundancies in the residuals.

- Entropy coding of the transformed residual coefficients and of the supporting data such as motion vectors.
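The steps above form the classic hybrid coding loop. The sketch below is a minimal illustration under simplifying assumptions: it quantizes the residual directly (skipping the transform and entropy coding), and the block values, the prediction, and the step size `QSTEP` are made up for the example. What it does show is that the encoder reconstructs each block the same way the decoder will, from the prediction plus the quantized residual.

```python
# Toy hybrid block coder: predict, form a residual, quantize it, and
# reconstruct exactly as a decoder would. Transform and entropy coding are
# omitted; QSTEP is an arbitrary illustrative step size.
QSTEP = 4

def residual(source, prediction):
    """Residual = source minus prediction, sample by sample."""
    return [[s - p for s, p in zip(srow, prow)]
            for srow, prow in zip(source, prediction)]

def quantize(block, step=QSTEP):
    """Uniform scalar quantizer (Python's round: nearest level, ties to even)."""
    return [[round(v / step) for v in row] for row in block]

def dequantize(levels, step=QSTEP):
    """Scale quantized levels back to residual values."""
    return [[q * step for q in row] for row in levels]

def reconstruct(prediction, decoded_residual):
    """Prediction plus decoded residual: the block both sides will reference."""
    return [[p + r for p, r in zip(prow, rrow)]
            for prow, rrow in zip(prediction, decoded_residual)]

source = [[52, 55, 61, 66],
          [70, 61, 64, 73],
          [63, 59, 55, 90],
          [67, 61, 68, 104]]
# Hypothetical prediction, e.g. a block fetched from a reference picture.
prediction = [[50, 54, 60, 64] for _ in range(4)]

levels = quantize(residual(source, prediction))   # what would be entropy coded
recon = reconstruct(prediction, dequantize(levels))
```

Each reconstructed sample differs from the source by at most QSTEP / 2. The encoder uses `recon`, not `source`, as the reference for later predictions, so it never drifts away from what the decoder sees.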

Major Features of H.264

Improved Inter-Prediction and Motion Estimation

First recall the limitations of motion estimation in MPEG-2, which searches reference pictures for a 16×16 set of pixels that closely matches the current macro block. The matching set of pixels must be completely within the reference picture. In contrast, H.264 provides:

- Fine-grained motion estimation. Temporal search seeks matching sub-macro blocks of variable size as small as 4×4, and finds the motion vector to 1/4 pel resolution. Searches may also identify motion vectors associated with matching sub-macro blocks of 4×8, 8×4, 8×8, 8×16, 16×8, or the full 16×16. [In the future, even finer 1/8 pel resolution will be supported.]

- Multiple reference frames. H.264 allows a frame to be predicted from more than one reference frame, in any combination of past and future frames. This provides opportunities for more precise inter-prediction and also improves robustness to lost picture data.

- Unrestricted motion search. Motion vectors may point to reference blocks that lie partly outside the picture; the missing samples are filled in from the picture’s boundary data. Users may disable this feature by specifying a restricted motion search.

- Motion vector prediction. Because the motion vectors of neighboring blocks are usually highly correlated, each motion vector can be predicted from those already coded, and only the difference (the motion vector residual) is transmitted explicitly in the bitstream.

Such techniques not only make inter-prediction more accurate but also help partition and scale the bitstream, giving priority to data that applies more globally. Thus, they improve not only compression but also resilience to errors and network instabilities.
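The search and prediction ideas above can be sketched with integer-pel block matching. This is a simplified illustration, not the H.264 reference algorithm: it uses a sum-of-absolute-differences (SAD) cost and an exhaustive search over a small window, clamps reference coordinates to the picture edge to mimic unrestricted motion vectors, and omits sub-pel interpolation, variable partition sizes, and multiple references. `median_mv_predictor` takes the component-wise median of three neighbouring vectors, the core of H.264's motion vector prediction; the standard's special cases (unavailable neighbours, 16×8 and 8×16 partitions) are left out. All function names are hypothetical.

```python
def ref_sample(ref, x, y):
    """Fetch a reference sample, clamping coordinates to the picture edge so a
    motion vector may point partly outside the picture (edge replication)."""
    h, w = len(ref), len(ref[0])
    return ref[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

def sad(cur, ref, bx, by, size, mvx, mvy):
    """SAD between the current block at (bx, by) and the reference block
    displaced by the motion vector (mvx, mvy)."""
    return sum(abs(cur[by + j][bx + i] - ref_sample(ref, bx + i + mvx, by + j + mvy))
               for j in range(size) for i in range(size))

def full_search(cur, ref, bx, by, size, search_range):
    """Exhaustive integer-pel search; returns (best_mv, best_sad).
    Ties keep the first candidate found in scan order."""
    best = None
    for mvy in range(-search_range, search_range + 1):
        for mvx in range(-search_range, search_range + 1):
            cost = sad(cur, ref, bx, by, size, mvx, mvy)
            if best is None or cost < best[1]:
                best = ((mvx, mvy), cost)
    return best

def median_mv_predictor(mv_a, mv_b, mv_c):
    """Component-wise median of the left (A), above (B) and above-right (C)
    neighbours' motion vectors."""
    return tuple(sorted(c)[1] for c in zip(mv_a, mv_b, mv_c))

W, H = 16, 16
ref = [[y * W + x for x in range(W)] for y in range(H)]   # all samples distinct
# Current picture: reference content displaced so the true motion is (2, 1).
cur = [[ref_sample(ref, x + 2, y + 1) for x in range(W)] for y in range(H)]

mv, cost = full_search(cur, ref, 4, 4, 4, search_range=2)   # -> (2, 1), 0
pred = median_mv_predictor((1, 0), (3, -2), (2, 5))          # -> (2, 0)
mvd = (mv[0] - pred[0], mv[1] - pred[1])                     # only this is sent -> (0, 1)
```

Because `ref_sample` clamps, a call such as `sad(cur, ref, 0, 0, 4, -3, -3)` still works for a block whose displaced position falls outside the picture.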

Improved Intra Spatial Prediction and Transform

Because “intra prediction” is concerned with only one picture at a time, it relies upon spatial rather than temporal correlations. As the algorithm works through a picture’s macro blocks in raster scan order, earlier results may be used to “predict” the downstream calculations. Then we need only transmit residuals as refinements to the predicted results.

H.264 performs intra prediction in the spatial domain (prior to the transform), and this is a key part of the approach. Even in an intra picture, every block of data is predicted from its neighbors, and only the prediction residual is transformed into coefficients for inclusion in the bitstream.
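Spatial intra prediction can be illustrated with three of the nine Intra 4×4 modes: vertical, horizontal, and DC. This minimal sketch assumes the four samples above the block and the four to its left have already been reconstructed and are available, and it chooses the mode by plain SAD. The six diagonal modes, the 16×16 modes, and the rate-distortion decisions a real encoder makes are omitted, and the function names are illustrative only.

```python
def predict_4x4(mode, top, left):
    """Build a 4x4 prediction from reconstructed neighbours.
    top: the 4 samples above the block; left: the 4 samples to its left."""
    if mode == "vertical":            # copy the row above downwards
        return [list(top) for _ in range(4)]
    if mode == "horizontal":          # copy the left column across
        return [[left[y]] * 4 for y in range(4)]
    if mode == "dc":                  # rounded mean of all 8 neighbours
        dc = (sum(top) + sum(left) + 4) >> 3
        return [[dc] * 4 for _ in range(4)]
    raise ValueError(f"unsupported mode: {mode}")

def best_mode(block, top, left, modes=("vertical", "horizontal", "dc")):
    """Pick the mode whose prediction leaves the smallest SAD residual."""
    def cost(mode):
        pred = predict_4x4(mode, top, left)
        return sum(abs(b - p) for brow, prow in zip(block, pred)
                   for b, p in zip(brow, prow))
    return min(modes, key=cost)

# A block of vertical stripes whose top neighbours continue the same pattern.
block = [[10, 50, 90, 130] for _ in range(4)]
top = [10, 50, 90, 130]
left = [10, 10, 10, 10]
print(best_mode(block, top, left))   # -> vertical (zero residual)
```

Here the vertical mode predicts the block perfectly, so only an all-zero residual would need to be coded; the horizontal and DC modes leave SADs of 960 and 720.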

- Coarse versus fine intra prediction. Intra prediction may be performed either on 4×4 blocks or on 16×16 macro blocks. The latter is more efficient for uniform areas of a picture.

- Direction-dependent intra modes. By doing intra prediction in the spatial domain (rather than in the transform domain), H.264 can employ direction-dependent prediction and thus focus on the most highly correlated neighbors. Intra 4×4 coding has 9 prediction modes, and Intra 16×16 coding has 4.

- 4×4 transform of Residual Data. For initially supported profiles, residual data transforms are always performed for 4×4 blocks of data, and coefficients transmitted on this fine-grained basis.

- Variable block sizes for spatial transform*. Future profiles will allow transform of variable size blocks (4×8, 8×8, etc.) with the same level of flexibility as motion estimation blocks. This will provide more flexibility and further reduction of bitrate.

- Integer transforms. Efficiency in both computation and bitrate is gained by replacing the traditional Discrete Cosine Transform (DCT) with an integer approximation that requires no multiplications, except for a single normalization. It can also be inverted exactly, so the encoder and decoder cannot drift apart through inverse-transform mismatch.

- Deblocking filter. To remove the fine-structure blockiness that the smaller transform blocks might otherwise aggravate, a context-sensitive deblocking filter smooths the internal block edges. Its strength depends on the prediction modes of, and the relationship between, neighboring blocks. Besides increasing the signal-to-noise ratio (S/N), the filter significantly improves the subjective quality of the image for a given S/N.
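The integer transform can be checked directly. The sketch below applies H.264's 4×4 core forward transform matrix to rows and then columns using only additions, subtractions, and shifts. Because the rows of that matrix are orthogonal (its product with its transpose is diag(4, 10, 4, 10)), the block can be recovered exactly once those row norms are divided out. In the standard, that normalization is folded into quantization and the decoder uses its own integer inverse; here the exact inverse is computed with Python's `fractions` module purely to show that the transform itself loses no information.

```python
from fractions import Fraction

# Core 4x4 forward transform matrix (an integer approximation of the DCT).
CF = [[1, 1, 1, 1],
      [2, 1, -1, -2],
      [1, -1, -1, 1],
      [1, -2, 2, -1]]

def forward_1d(x0, x1, x2, x3):
    """One row or column of the forward transform: adds, subtracts, shifts only."""
    s0, s3 = x0 + x3, x0 - x3
    s1, s2 = x1 + x2, x1 - x2
    return [s0 + s1, (s3 << 1) + s2, s0 - s1, s3 - (s2 << 1)]

def transpose(m):
    return [list(r) for r in zip(*m)]

def forward_4x4(block):
    """Y = CF * X * CF^T, computed as row transforms followed by column transforms."""
    rows = [forward_1d(*r) for r in block]
    return transpose([forward_1d(*c) for c in transpose(rows)])

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

# CF * CF^T = diag(4, 10, 4, 10), so CF^-1 = CF^T * D with D = diag(1/4, 1/10, 1/4, 1/10).
D = [[Fraction(1, n) if i == j else Fraction(0) for j, n in enumerate((4, 10, 4, 10))]
     for i in range(4)]

def inverse_4x4(coeffs):
    """Exact inverse X = CF^T * D * Y * D * CF, using rational arithmetic."""
    return matmul(matmul(matmul(matmul(transpose(CF), D), coeffs), D), CF)

flat = [[10] * 4 for _ in range(4)]
print(forward_4x4(flat))   # -> [[160, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]

block = [[52, 55, 61, 66], [70, 61, 64, 73], [63, 59, 55, 90], [67, 61, 68, 104]]
assert inverse_4x4(forward_4x4(block)) == block   # exact round trip, no mismatch
```

The flat block shows the energy compaction that makes the transform worthwhile: all of its energy lands in the single DC coefficient.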

Improved Algorithms for Encoding

Two alternative methods improve the efficiency of the entropy coding process by adapting the code to the context of the data being encoded.

- Context-Adaptive Variable Length Coding (CAVLC) employs multiple variable-length codeword tables to encode the transform coefficients, which consume the bulk of the bandwidth. The best table is selected adaptively from statistics of already-processed data, such as the number of nonzero coefficients in neighboring blocks. For non-coefficient data, a simpler scheme is used that relies on only a single table.

- Context-Adaptive Binary Arithmetic Coding (CABAC*) provides an extremely efficient encoding scheme when certain symbols are known to be much more likely than others: such dominant symbols can be coded with well under one bit per symbol. CABAC continually updates the frequency statistics of the incoming data and adapts its probability models in real time. This method is an advanced option available in profiles beyond the baseline profile.
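The difference between the two approaches shows up in the cost of a highly probable symbol. In H.264 the single table used for non-coefficient data is the unsigned Exp-Golomb code, sketched below; every codeword is at least one bit long. An arithmetic coder can instead approach the ideal cost of -log2(p) bits per symbol. `adaptive_ideal_bits` estimates p from running counts updated after every symbol; this simple estimator is a stand-in chosen for illustration and is not CABAC's actual probability state machine or binarization.

```python
import math

def exp_golomb_ue(k):
    """Unsigned Exp-Golomb codeword for code number k >= 0, as a bit string:
    M leading zeros followed by the (M + 1)-bit binary form of k + 1."""
    if k < 0:
        raise ValueError("code number must be non-negative")
    value = k + 1
    m = value.bit_length() - 1
    return "0" * m + format(value, "b")

def adaptive_ideal_bits(symbols):
    """Ideal arithmetic-coding cost, in bits, of a binary sequence when each
    symbol's probability is re-estimated from running counts (both counts
    start at 1). A simplified stand-in for context modelling, not CABAC."""
    counts = [1, 1]
    bits = 0.0
    for s in symbols:
        p = counts[s] / (counts[0] + counts[1])
        bits += -math.log2(p)
        counts[s] += 1
    return bits

print([exp_golomb_ue(k) for k in range(5)])   # -> ['1', '010', '011', '00100', '00101']

run = [0] * 100                                      # one dominant symbol
vlc_bits = sum(len(exp_golomb_ue(s)) for s in run)   # 1 bit per symbol
ac_bits = adaptive_ideal_bits(run)                   # log2(101), about 6.7 bits
```

For a run of 100 zeros the Exp-Golomb codes cost 100 bits, while the adaptive estimate totals only about 6.7 bits, because the estimated probability of the dominant symbol climbs toward 1 as the statistics accumulate.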

Techniques for Mitigation of Errors, Packet Losses, and Network Variability

Error containment and scalability

H.264 includes several other features that are useful in containing the impact of errors, and in enabling the use of scalable or multiple bit streams:

- Slice coding. Each picture is subdivided into one or more slices. In H.264 the slice takes on greater importance as the basic spatial segment that is independent of its neighbors, so errors or missing data in one slice cannot propagate to any other slice within the picture. This also makes it possible to extend picture types (I, P, B) down to the level of “slice types.” Redundant slices are permitted.

- Data partitioning is supported to allow higher-priority data (e.g., slice headers and motion vectors) to be separated from lower-priority data (e.g., B-picture transform coefficients).

- Flexible macro block ordering (FMO) assigns macro blocks to slice groups so that the bits associated with adjoining macro blocks can be scattered throughout the bit stream. This reduces the chance that a packet loss will affect a large region, and it enables error concealment by ensuring that neighboring macro blocks will be available for predicting a missing macro block.

- The Multiple Reference Frames that are used for improved motion estimation also allow for partial motion compensation for a P picture when one of its referenced frames is missing or corrupted.
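The concealment benefit of FMO can be illustrated with a two-group checkerboard, in the spirit of FMO's dispersed slice-group map. This hypothetical sketch assumes a QCIF picture (11×9 macro blocks) and that every packet of slice group 1 is lost; it counts how many of each lost macro block's four neighbours survive for concealment.

```python
def checkerboard_map(width_mbs, height_mbs):
    """Assign each macro block to slice group 0 or 1 in a checkerboard
    pattern (a simplified dispersed slice-group map)."""
    return [[(x + y) % 2 for x in range(width_mbs)] for y in range(height_mbs)]

def surviving_neighbours(group_map, lost_group, x, y):
    """Count the up/down/left/right neighbours of macro block (x, y) that lie
    inside the picture and belong to a slice group that was NOT lost."""
    h, w = len(group_map), len(group_map[0])
    count = 0
    for dx, dy in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nx, ny = x + dx, y + dy
        if 0 <= nx < w and 0 <= ny < h and group_map[ny][nx] != lost_group:
            count += 1
    return count

gmap = checkerboard_map(11, 9)   # QCIF: 11 x 9 macro blocks
# Suppose every packet carrying slice group 1 is lost; look at interior MBs.
interior_lost = [(x, y) for y in range(1, 8) for x in range(1, 10) if gmap[y][x] == 1]
print(min(surviving_neighbours(gmap, 1, x, y) for x, y in interior_lost))   # -> 4
```

Every lost interior macro block keeps all four neighbours for concealment. With one row of macro blocks per slice in raster order, by contrast, losing a slice would leave each lost block at most its two vertical neighbours.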

SI and SP Pictures (or slices)*

MPEG-2 practice is to insert intra (I) pictures at regular intervals to contain errors that could otherwise propagate through the picture sequence indefinitely. Intra pictures also provide a means for random access and fast-forward actions, because they do not require any knowledge of other reference frames. Similarly, regular I pictures would be needed to switch promptly between higher- and lower-bitrate streams, an important feature for accommodating the bandwidth variability of mobile networks. However, I pictures typically require far more bits than P pictures and are thus an inefficient way to meet these requirements.

H.264 introduces two new slice types, “Switching I Pictures” (SI) and “Switching P Pictures” (SP), which address these needs at a significantly reduced bit rate. Identical SP frames can be reconstructed even when they are predicted from different reference frames, so they can replace I frames as temporal resynchronization points while using far fewer bits. SP pictures rely on the transformation and quantization of predicted inter blocks and do not take full advantage of intra prediction; at the cost of some additional bits, they can be extended to SI pictures, which do.

Note that because slices are coded independently, switching slices (SI or SP) can be defined at that level.

Low Latency Feature

Arbitrary Slice Ordering (ASO) relaxes the constraint that the slices of a picture must arrive in macro block order, so a decoder can process each slice as soon as it is received. This enhances the low-delay performance that is important in teleconferencing, surveillance, and interactive Internet applications.

Simplified Profiles

H.264 is focused entirely on efficient coding of natural video and does not directly address the object-oriented functionality, synthetic video, and systems features of MPEG-4, which defines a very complex structure of more than 50 profiles.

In contrast, H.264 is initially defined with only three profiles:

- Baseline Profile. A basic goal of H.264 was to provide a royalty-free baseline profile to encourage early adoption of the standard. The baseline profile includes most of the major features described above, with the exception of B slices and weighted prediction, CABAC encoding, field coding, and SP and SI slices. Thus, the baseline profile is appropriate for many progressive-scan applications such as video conferencing and video over IP, but not for interlaced television or multiple-stream applications.

- Main Profile. Main profile contains all of the features in Baseline except flexible macro block ordering (FMO), arbitrary slice ordering (ASO), and redundant slices. However, it adds field coding, B slices and weighted prediction, and CABAC entropy coding. This profile is appropriate for efficient coding of interlaced television applications where bit and packet error rates are modest and low latency is not a requirement.

- Extended Profile. This profile contains all features of the Baseline and Main profiles except CABAC. In addition, the Extended profile adds SP and SI slices for stream switching, and up to 8 slice groups. This profile is appropriate for server-based streaming applications where bit-rate scalability and error resilience are very important; security applications and mobile video services are examples.
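The three profile descriptions above can be summarized as feature sets. The sets below contain only the features named in the text, plus the I and P slices and CAVLC entropy coding that all three profiles share; they are a reading aid, not the full tool lists of the standard. `profiles_supporting` is a hypothetical helper that returns the profiles covering a required set of features.

```python
# Feature sets transcribed from the profile descriptions above (not exhaustive).
BASELINE = {"I/P slices", "CAVLC", "FMO", "ASO", "redundant slices"}
MAIN = (BASELINE - {"FMO", "ASO", "redundant slices"}) | {
    "field coding", "B slices", "weighted prediction", "CABAC"}
EXTENDED = ((BASELINE | MAIN) - {"CABAC"}) | {"SP/SI slices"}

PROFILES = {"Baseline": BASELINE, "Main": MAIN, "Extended": EXTENDED}

def profiles_supporting(required):
    """Names of the profiles whose feature set contains every required feature."""
    return [name for name, features in PROFILES.items() if set(required) <= features]

print(profiles_supporting({"CABAC"}))              # -> ['Main']
print(profiles_supporting({"FMO", "B slices"}))    # -> ['Extended']
print(profiles_supporting({"CABAC", "SP/SI slices"}))   # -> []
```

Writing Main and Extended as set operations on Baseline mirrors the way the text defines them ("all of the features in Baseline, except ...").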

Where will H.264 have the biggest impact?

Any video application can benefit from reduced bandwidth requirements, but the greatest impact will be in applications where the reduction relieves a hard technical constraint or makes more cost-effective use of bandwidth as a limiting resource.

In addition, other H.264 features such as error containment, error concealment, and efficient bitstream switching are especially useful in IP and wireless environments.

Squeeze More Services into a Broadcast Channel

Reducing bandwidth requirements by a factor of 2 to 3 provides cost savings for bandwidth-constrained services such as satellite and DVB-Terrestrial, or alternatively allows these providers to expand their services at a reduced incremental cost.

Facilitate High Quality Video Streaming over IP Networks

H.264 can produce very good, TV-quality streaming of standard-definition video at less than 1 Mbps. This slips under the 1 Mbps threshold of many xDSL connections and thus opens possibilities for new ways to access high-quality, larger-format video.

High Definition Transmission and Storage

MPEG-2 consumes 15-20 Mbps for High Definition video at a quality suitable for broadcast or DVD. H.264 will bring this down to about 8 Mbps, making it possible for bandwidth-strapped satellite service providers to fit 4 HD channels into a single QPSK channel.

Even more significant, this reduction makes it possible to burn one HD movie onto a conventional DVD, avoiding the need for the industry to adopt a higher-density (“blue laser”) DVD format.
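The storage and channel claims above can be checked with back-of-the-envelope arithmetic. The 2-hour running time, the 4.7 GB single-layer and 8.5 GB dual-layer DVD capacities, and the 32 Mbps satellite payload (the smallest that holds four 8 Mbps channels) are assumptions for illustration, and audio and multiplexing overhead are ignored.

```python
# Illustrative assumptions: running time, disc capacities (decimal bytes), and
# satellite payload. Audio and container overhead are ignored.
SECONDS = 2 * 60 * 60           # assumed 2-hour movie
DVD_SINGLE_LAYER = 4.7e9        # bytes
DVD_DUAL_LAYER = 8.5e9          # bytes

def movie_bytes(video_mbps, seconds=SECONDS):
    """Size of a constant-bitrate video stream in bytes."""
    return video_mbps * 1e6 * seconds / 8

def max_mbps(capacity_bytes, seconds=SECONDS):
    """Highest average bitrate that fits the given capacity."""
    return capacity_bytes * 8 / seconds / 1e6

def channels(payload_mbps, per_channel_mbps):
    """Whole channels that fit into a given payload."""
    return int(payload_mbps // per_channel_mbps)

print(movie_bytes(8) / 1e9)                  # H.264 HD at 8 Mbps -> 7.2 (GB)
print(movie_bytes(15) / 1e9)                 # MPEG-2 HD at 15 Mbps -> 13.5 (GB)
print(round(max_mbps(DVD_DUAL_LAYER), 2))    # dual-layer limit -> 9.44 (Mbps)
print(channels(32, 8), channels(32, 15))     # H.264 vs MPEG-2 HD channels -> 4 2
```

Under these assumptions an 8 Mbps HD movie (7.2 GB) exceeds a single-layer disc, whose 2-hour limit is about 5.22 Mbps, but fits on a dual-layer disc; at 15-20 Mbps, the same movie in MPEG-2 would need 13.5-18 GB.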

Mobile Video Applications

3G mobile networks present an unusual array of technical challenges that have driven many features in H.264. Applications include video conferencing, streaming video on demand, multimedia messaging services, and low-resolution broadcast. Some key issues, and the H.264 tools for dealing with them, include:

- Low bandwidth (50-300 kbps) is the key issue. The expected trend is for 3G deployment to start with H.263 and move up to H.264 as it matures. An industry analyst points out “… 3G networks are only likely to offer 57.6kbit/s initially. As those bit rates increase, mobiles and networks will move to the new H.264 codec, which offers twice the performance of H.263. This should result in the same picture quality being achieved at half the bit rate.”

- Small devices with many formats; variability of available bandwidth. For streaming applications, these two separate issues can be addressed by providing multiple streams with different formats and bandwidths, and selecting the appropriate stream at run-time. H.264’s SP and SI pictures facilitate dynamic switching among multiple streams to accommodate bandwidth variability.

- High bit error rates, packet losses, and latency. For video applications, retransmitting dropped or delayed packets is impractical, so H.264 provides several means (e.g., FMO and data partitioning) to contain the impact of errors and facilitate error concealment.
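Run-time stream selection can be sketched as choosing the highest-rate rendition that fits the measured bandwidth. In this hypothetical sketch the bitrate ladder, the 0.8 safety margin (`headroom`), and the function name are assumptions; in an H.264 deployment the actual switch between streams would take place at SP or SI pictures, which this sketch does not model.

```python
def select_stream(bitrates_kbps, measured_kbps, headroom=0.8):
    """Pick the highest-rate stream that fits within a fraction (headroom) of
    the measured bandwidth; fall back to the lowest-rate stream if none fits."""
    budget = measured_kbps * headroom
    fitting = [b for b in bitrates_kbps if b <= budget]
    return max(fitting) if fitting else min(bitrates_kbps)

ladder = [64, 128, 256]   # hypothetical renditions within the 50-300 kbps range
print(select_stream(ladder, 200))   # budget 160 kbps -> 128
print(select_stream(ladder, 50))    # nothing fits -> 64
print(select_stream(ladder, 400))   # budget 320 kbps -> 256
```

The headroom keeps the chosen stream below the measured rate, so that short-term bandwidth dips on a mobile link do not immediately stall playback.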

What is the relationship to MPEG-4 and MPEG-2?

Compared to MPEG-2

H.264 employs the same general approach as MPEG-1 and MPEG-2 and the H.261 and H.263 standards, but adds many incremental improvements that together raise coding efficiency by about a factor of 3.

Whereas MPEG-2 was optimized for Standard and High Definition digital television delivered over dedicated satellite, cable, and terrestrial infrastructure, H.264 was also designed for heterogeneous mobile networks, packet-switched IP networks, and multiple storage formats, and for the high jitter, packet loss, and bandwidth instability those environments bring.

Compared to MPEG-4

During 2002, the ITU-T Video Coding Experts Group (VCEG) combined forces with MPEG-4 experts to form the Joint Video Team (JVT), so H.264 is being published as MPEG-4 Part 10 (Advanced Video Coding).

MPEG-4 is really a family of standards whose overall theme is object-oriented multimedia applications. It thus has a much broader scope than H.264, which is strictly focused on more efficient and robust video coding. The comparable part of MPEG-4 is Part 2, Visual (sometimes called “Natural Video”). Other parts of MPEG-4 address scene composition, object description and Java representation of behavior, animation of human body and facial movements, audio, and systems.